Introduction

This is written in the spirit of the Request for Comments memorandums that shaped the early Internet. RFCs, as they are known, are submitted to propose a technology or methodology and to gather comments and corrections from relevant and knowledgeable community members, in the hope of becoming a widely accepted standard.

Purpose

This is a consideration of the information technology options for politically and socially active organizations. It’s also a high-level overview of the technical landscape. The target audience is technical decision makers in groups whose political commitments challenge the prevailing order and are focused on liberation. In this document, I provide a brief history of past patterns and compare them to current choices, identifying the problems of various models and potential opportunities.

Alongside this blog post there is a living document posted for collaboration here. I invite a discussion of ideas, methods and technologies I may have missed or might be unaware of to improve accuracy and usefulness.

Being Intentional About Technology Choices

It is a truism that modern organizations require technology services. Less commonly discussed are the political, operational, cost and security implications of this dependence from the perspective of activists. It’s important to be intentional about technological choices and deployments with these and other factors in mind. The path of least resistance, such as choosing Microsoft 365 for collaboration rather than building on-premises systems, may be the best, or least terrible, choice for an organization, but the decision to use it should come after weighing the pros and cons of other options. What follows is not an exhaustive history; I am purposefully leaving out many granular details to get to the point as efficiently as possible.

A Brief History of Organizational Computing

By ‘organizational computing’ I’m referring to the use of digital computers arranged into service platforms by non-governmental and non-military organizations. This section is a high-level walkthrough of the patterns that have been used in this sector.

Mainframes

IBM 360 in Computer Room – mid 1960s

The first use of digital computing at scale was the deployment of mainframe systems as centrally hosted resources. User access, limited to specialists, was provided via time sharing, in which ‘dumb’ terminals displayed the results of programs and accepted input (punch cards were also used to input program instructions). One of the most successful systems was the IBM 360 (operational from 1965 to 1978). Due to the expense, typical customers were large banks, universities and other organizations with deep pockets.

Client Server

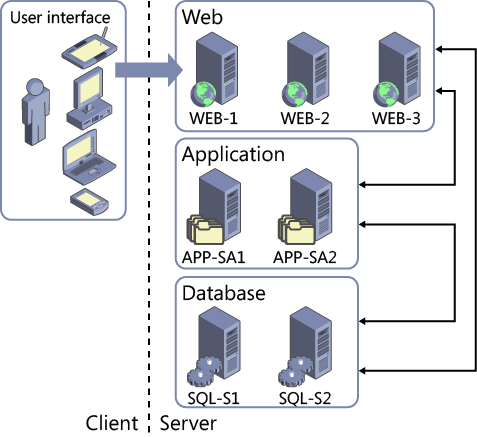

Classic Client Server Architecture (Microsoft)

The introduction of personal computers in the 1980s created the raw material for the development of networked, smaller scale systems that could supplement mainframes and provide organizations with the ability to host relatively modest computing platforms that suited their requirements. By the 1990s, this became the dominant model used by organizations at all scales (mainframes remain in service but the usage profile became narrower – for example, to run applications requiring greater processing capability than what’s possible using PC servers).

The client server era spawned a variety of software applications to meet organizational needs, such as email servers (for example, Sendmail and Microsoft Exchange), database servers (for example, PostgreSQL and SQL Server), web servers such as Apache and so on. Companies such as Novell, Cisco, Dell and Microsoft rose to prominence during this time.

As the client server era matured and the need for computing power grew, companies like VMware sold platforms that enabled the creation of virtual machines (software mimics of physical servers). Organizations that could not afford to own or rent large data centers could deploy the equivalent of hundreds or thousands of servers within a smaller number of more powerful (in terms of processing capacity and memory) computing systems running VMware’s ESX software platform. Of course, the irony of this return to something like a mainframe was not lost on information technology workers whose careers spanned the mainframe and client server eras.

Cloud computing

Cloud Pattern (Amazon Web Services)

Virtualization, combined with the improved Internet access of the early 2000s, gave rise to what is now called ‘cloud.’ Among information technology workers, it was popular to say ‘there is no cloud, it’s just someone else’s computer.’ Overconfident cloud enthusiasts considered this to be the complaint of a fading old guard but it is undeniably true.

The Cloud Model

There are four modes of cloud computing:

- Infrastructure as a service – IaaS: (for example, building virtual machines on platforms such as Microsoft Azure, Amazon Web Services or Google Cloud Platform)

- Platform as a service – PaaS: (for example, databases offered as a service utility eliminating the need to create a server as host)

- Software as a Service – SaaS: (platforms like Microsoft 365 fall into this category)

- Function as a Service – FaaS: (focused on deployment using software development – ‘code’ – alone with no infrastructural management responsibilities)

A combination of perceived (but rarely realized) convenience, marketing hype and mostly unfulfilled promises of lower running costs has made the cloud model the dominant mode of the 2020s. In the 1990s and early 2000s, an organization requiring an email system was compelled to acquire hardware and software to configure and host its own platform (the Microsoft Exchange email system running on Dell server hardware or on VMware virtual machines was a common pattern). The availability of Office 365 (later, Microsoft 365) and Google’s G Suite (later, Google Workspace) provided another, attractive option that eliminated the need to manage systems while providing the email function.

A Review of Current Options for Organizations

Although tech industry marketing presents new developments as replacing old, all of the pre-cloud patterns mentioned above still exist. The question is, what makes sense for your organization from the perspectives of:

- Cost

- Operational complexity

- Maintenance complexity

- Security and exposure to vulnerabilities

- Availability of skilled workers (related to the ability to effectively manage all of the above)

We needn’t include mainframes in this section since they are cost-prohibitive and, today, intended for specialized, high-performance applications.

Client Server (on-premises)

By ‘on-premises’ we are referring to systems that are not cloud-based. Before the cloud era, the client server model was the dominant pattern for organizations of all sizes. Servers can be hosted within a data center the organization owns or within rented space in a colocation facility (a business that provides rented space for the servers of various clients).

Using a client server model requires employing staff who can install, configure and maintain systems. These skills were once common, indeed standard, and salaries were within the reach of many mid-size organizations. The cloud era has made these skills harder to come by (although there are still many skilled and enthusiastic practitioners). A key question is, how much investment does your organization want to make in the time and effort required to build and manage its own system? Additional questions for consideration come from software licensing and software and hardware maintenance cycles.

Sub-categories of client server to consider

Virtualization and Hyper-converged hardware

As mentioned above, the use of virtualization systems, offered by companies such as VMware, was one method that arose during the heyday of client server to address the need for more concentrated computing power in a smaller data center footprint.

Hyper-converged infrastructure (HCI) systems, which combine compute, storage and networking in a single hardware chassis, are a further development of this method. HCI systems and virtualization reduce the required operational overhead. More about this later.

Hybrid architectures

A hybrid architecture uses a mixture of on-premises and off-site, typically ‘cloud’ based systems. For example, an organization’s data might be stored on-site but the applications using that data are hosted by a cloud provider.

Cloud

Software as a Service

Software as a Service platforms such as Microsoft 365 are the most popular cloud services used by firms of all types and sizes, including activist groups. The reasons are easy to understand:

- Email services without the need to host an email server

- Collaboration tools (SharePoint and MS Teams for example) built into the standard licensing schemes

- Lower (but not zero) operational responsibility

- Hardware maintenance and uptime are handled by the service provider

The convenience comes at a price, both financial, as licensing costs increase, and operational, inasmuch as organizations tend to place all of their data and workflows within these platforms, creating deep dependencies.

Build Platforms

The use of ‘build platforms’ like Azure and AWS is more complex than the consumption model of services such as Microsoft 365. Originally, these were designed to meet the needs of organizations that have development and infrastructure teams and host complex applications. More recently, the ‘AI’ hype push has made these platforms trojan horses for hyperscale algorithmic platforms (note, as an example, Microsoft’s investment in and use of OpenAI’s large language models). The most common pattern is a replication of large-scale on-premises architectures using virtual machines on a cloud platform.

Although marketed as superior to, and simpler than, on-premises options, cloud platforms require as much, and often more, technical expertise. Cost overruns are common: cloud platforms make it easy to deploy new things, but each item generates a cost. Even small organizations can run up very large bills. Security is another factor: configuration mistakes are common and there are many examples of data breaches caused by error.
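To make the point about each deployed item generating a cost concrete, here is a minimal sketch of how a bill grows when resources are added and then forgotten. The resource names and hourly rates are hypothetical placeholders chosen for easy arithmetic, not prices from any real provider.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    hourly_rate: float              # hypothetical price in USD per hour, not a real vendor rate
    hours_per_month: float = 730.0  # roughly the number of hours in a month


def monthly_cost(resources):
    """Sum the monthly cost of every deployed resource."""
    return sum(r.hourly_rate * r.hours_per_month for r in resources)


# A small deployment: one virtual machine and one managed database.
deployment = [
    Resource("web-vm", 0.10),
    Resource("database", 0.25),
]
baseline = monthly_cost(deployment)

# A test environment that nobody remembered to shut down duplicates both items.
deployment += [
    Resource("test-web-vm", 0.10),
    Resource("test-database", 0.25),
]
with_forgotten_test_env = monthly_cost(deployment)

print(f"baseline: ${baseline:.2f}/month")
print(f"with forgotten test environment: ${with_forgotten_test_env:.2f}/month")
```

In this sketch nothing about the organization's needs changed between the two totals; only the number of running items did. Keeping even a simple inventory like this is one way to notice that kind of drift before the invoice arrives.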

Private Cloud

The potential key advantage of the cloud model is the ability to abstract technical complexity. Ideally, programmers are able to create applications that run on hardware without the requirement to manage operating systems (a topic outside of the scope of this document). Private cloud enables the staging of the necessary hardware on-premises. A well-known example is OpenStack, which is technically very challenging to operate. Commercial options include Microsoft’s Azure Stack, which extends the Azure technology method to hyper-converged infrastructure (HCI) hosted within an organization’s data center.

Information Technology for Activists – What is To Be Done?

In the recent past, the answer was simple: purchase hardware and software, then install and configure it with the help of technically adept staff, volunteers or a mix. In the 1990s and early 2000s it was typical for small to midsize organizations to have a collection of networked personal computers connected to a shared printer within an office. Through the network (known as a local area network or LAN) these computers were connected to more powerful computers called servers, which provided centralized storage and the means through which individual computers could communicate in a coordinated manner and share resources. Organizations often hosted their own websites, which were made available to the Internet via connections from telecommunications providers.

Changes in the technology market since the mid-2000s, pushed to increase the market dominance and profits of a small group of firms (primarily Amazon, Microsoft and Google), have limited options even as these changes appear to offer greater convenience. How can these constraints be navigated?

Proposed Methodology and Doctrines

Earlier in this document, I mentioned the importance of being intentional about technology usage. In this section, more detail is provided.

Let’s divide this into high level operational doctrines and build a proposed architecture from that.

First Doctrine: Data Sovereignty

Organizational data should be stored on-premises using dedicated storage systems rather than in a SaaS such as Microsoft 365 or Google Workspace.

Second Doctrine: Bias Towards Hybrid

By ‘hybrid’ I am referring to system architectures that utilize a combination of on-premises and ‘cloud’ assets.

Third Doctrine: Bias Towards System Diversity

This might also be called the ‘right tool for the right job’ doctrine. After consideration of relevant factors (cost, technical ability, etc.) an organization may decide to use Microsoft 365 (for example) to provide some services, but other options should be explored in the areas of:

- Document management and related real time collaboration tooling

- Online Meeting Platforms

- Database platforms

- Email platforms

Commercial platforms offer integration methods between platforms that make it possible to create an aggregated solution from disparate tools.
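One way to keep the three doctrines in view is to write down an inventory of services and check it against them. The sketch below is only an illustration: the `Service` fields, the `check_doctrines` function and the example inventory are all hypothetical, and the notes it produces are prompts for discussion rather than rules.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    vendor: str         # who operates the service ("self" for self-hosted)
    hosting: str        # where the application runs: "on-premises" or "cloud"
    data_location: str  # where the data is stored: "on-premises" or "cloud"


def check_doctrines(services):
    """Return discussion prompts where an inventory departs from the three doctrines."""
    notes = []
    if not services:
        return notes
    # First doctrine: organizational data should be stored on-premises.
    for s in services:
        if s.data_location != "on-premises":
            notes.append(f"{s.name}: data is stored with {s.vendor}, not on-premises")
    # Second doctrine: prefer a mix of on-premises and cloud assets.
    if len({s.hosting for s in services}) < 2:
        notes.append(f"all services run in one place ({services[0].hosting}); no hybrid mix")
    # Third doctrine: avoid relying on a single vendor for every service.
    vendor, count = Counter(s.vendor for s in services).most_common(1)[0]
    if len(services) > 1 and count == len(services):
        notes.append(f"every service depends on one vendor: {vendor}")
    return notes


# Hypothetical inventory for a small organization.
inventory = [
    Service("email", "Microsoft", "cloud", "cloud"),
    Service("documents", "self", "on-premises", "on-premises"),
    Service("meetings", "self", "cloud", "on-premises"),
]
for note in check_doctrines(inventory):
    print(note)
```

An organization may well decide that a flagged item, such as hosted email, is an acceptable trade-off. The value of the exercise is that the decision is made deliberately and recorded.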

These doctrines can be applied as guidelines for designing an organizational system architecture:

The above is only one option. More are possible depending on the aforementioned factors of:

- Cost

- Operational complexity

- Maintenance complexity

- Security and exposure to vulnerabilities

- Availability of skilled workers (related to the ability to effectively manage all of the above)
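As a rough aid for weighing these factors against each other, a simple weighted scoring table can be sketched in a few lines. The weights and scores below are invented placeholders, not assessments of any real product or architecture; each organization would substitute its own after discussion.

```python
# Weights express how much each factor matters to the organization (hypothetical values).
WEIGHTS = {
    "cost": 3,
    "operational_complexity": 2,
    "maintenance_complexity": 2,
    "security": 3,
    "skilled_workers": 2,
}

# Scores run from 1 (poor) to 5 (good). These are placeholders for illustration only.
OPTIONS = {
    "on-premises": {"cost": 3, "operational_complexity": 2, "maintenance_complexity": 2,
                    "security": 4, "skilled_workers": 2},
    "hybrid": {"cost": 3, "operational_complexity": 3, "maintenance_complexity": 3,
               "security": 4, "skilled_workers": 3},
    "SaaS only": {"cost": 2, "operational_complexity": 4, "maintenance_complexity": 5,
                  "security": 2, "skilled_workers": 4},
}


def weighted_score(scores, weights=WEIGHTS):
    """Multiply each factor's score by its weight and add the results."""
    return sum(weights[factor] * scores[factor] for factor in weights)


def rank(options):
    """Return option names ordered from highest to lowest weighted score."""
    return sorted(options, key=lambda name: weighted_score(options[name]), reverse=True)


for name in rank(OPTIONS):
    print(f"{name}: {weighted_score(OPTIONS[name])}")
```

The numbers matter less than the conversation needed to agree on them. Changing a single weight, for example raising the importance of security, can reorder the result, which is a useful way to test how sensitive a decision is to the assumptions behind it.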

I invite others to add to this document to improve its content and sharpen the argument.

Activist Documents and Resources Regarding Alternative Methods

Counter Cloud Action Plan – The Institute for Technology In the Public Interest

https://titipi.org/pub/Counter_Cloud_Action_Plan.pdf

Measurement Network

“measurement.network provides non-profit network measurement support to academic researchers”

Crisis, Ethics, Reliability & a measurement.network by Tobias Fiebig Max-Planck-Institut für Informatik Saarbrücken, Germany

https://dl.acm.org/doi/pdf/10.1145/3606464.3606483

Tobias Fiebig Max-Planck-Institut für Informatik and Doris Aschenbrenner Aalen University

https://dl.acm.org/doi/pdf/10.1145/3538395.3545312

Decentralized Internet Infrastructure Research Group Session Video

“Oh yes! over-preparing for meetings is my jam :)”: The Gendered Experiences of System Administrators

https://dl.acm.org/doi/pdf/10.1145/3579617

Revolutionary Technology: The Political Economy of Left-Wing Digital Infrastructure by Michael Nolan

References in the Post

RFC

https://en.wikipedia.org/wiki/Request_for_Comments

Openstack

https://en.wikipedia.org/wiki/OpenStack

Self Hosted Document Management Systems

Overview

https://noted.lol/self-hosted-dms-applications/

Teedy

https://teedy.io/?ref=noted.lol#!/

Only Office

https://www.onlyoffice.com/desktop.aspx

Digital Ocean

IBM 360 Architecture

https://www.researchgate.net/figure/BM-System-360-architectural-layers_fig2_228974972

Client Server Model

https://en.wikipedia.org/wiki/Client–server_model

Mainframe

https://en.wikipedia.org/wiki/Mainframe_computer

Virtual Machine

https://en.wikipedia.org/wiki/Virtual_machine

Server Colocation

https://www.techopedia.com/definition/29868/server-colocation

I’ve been thinking a lot about this as well and agree with a lot of this. I’ve gotten as far as standing up my own on site data storage with VPN for remote access across my network of clients and colleagues, though my use cases remain largely in the amateur space compared to fully professional / large scale organization level workloads.

Regarding chat / meeting services, we’ve been exploring self-hostable alternatives: https://matrix.org/ and, to a lesser extent, https://jitsi.github.io/handbook/docs/devops-guide/ have been promising (with federation available for wider access). They still lag behind in user-facing features, but the Matrix / Element system has gotten the job done alright so far for our purposes.

My last, again amateur, comment is that I’ve been thinking a lot about uptime, resource sharing and data redundancy. One of the biggest pros of SaaS / cloud is general redundancy and on-demand resource allocation that is quicker (and “cheaper”) to implement than sourcing and managing hardware resources yourself.

One of my colleagues and I were thinking about collecting older hardware from our various other colleagues and friends and building out our own distributed web of virtualized computing resources: some way to provide both geographical distribution (and thus redundancy should one site go down) and some semblance of on-demand resource allocation. I guess we were essentially thinking of something like OpenStack.

I can’t help but feel like some sort of cooperative organized timeshare type “cloud” alternative could be potentially useful and disruptive to the dominant methodology, where multiple organizations pool resources for a shared cooperative cloud model. Obviously that raises some security concerns with a wider and more open distributive network and “trust” but it strikes me as something that could still be workable for a number of use-cases. I think if the “web” of involved organizations grows enough it could combine with cooperative / activist maker spaces as neutral locations to host some of the hardware or maybe a network of hardware hosted at local libraries could help create expanded footprint and better accessibility / uptime. I also feel that some way to repurpose older hardware as lower energy (undervolted, or otherwise clocked down to help lower energy costs) distributed computing resources is also something we ought to think more about writ large. A lot ends up as waste when it could still be useful (though energy costs might make such notions more prohibitive).
